Human fears surrounding the future of AI are a hot topic of late. Are you fearful of these kinds of advancements? Or are you a futurephile like me, excited for the future and the exhilarating technologies it will bring? If you are on the other side of the fence, perhaps a futurephobe, you’re not alone. In fact, many of us are fearful of AI and even of the future as a whole.

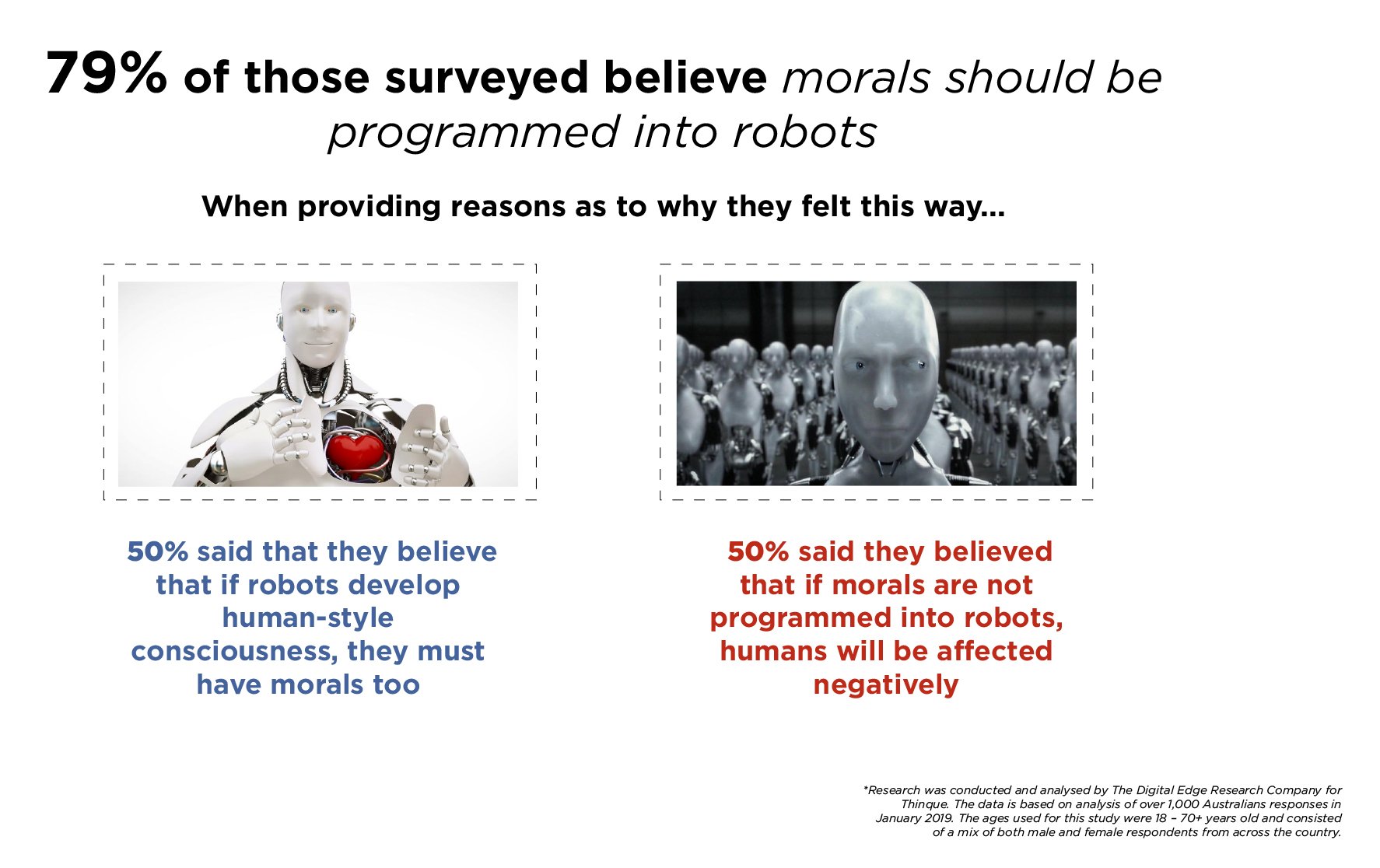

Recent research commissioned by Thinque* showed that one such fear surrounds ethics and the role ethics plays in robotic programming and behaviour. The research revealed that 79% of Australians believe morals should be programmed into robots. When asked why they felt this was necessary, respondents said they believe that if robots have human consciousness, they must be equipped with morals too (50%), and that if morals are not programmed into robots, humans will be affected negatively (50%).

[Chart: Who should be responsible?]

When probed further and asked who should be responsible for programming morals into robots, 59% said the original creator/software programmer should be, followed by the government (20%), the manufacturer (12%) and the company that owns them (9%).

As AI advances and its capabilities become more sophisticated, concerns about how we will manage these developments continue to grow. Building an ethical code into robots will therefore be necessary if they are to take on more important roles in our lives, such as performing complex medical procedures, driving cars and operating machinery, or undertaking teaching duties.

To program ethics into AI effectively, humans will need a collective set of universally agreed ethical rules (a far cry from the current state of the human world). For example, people worldwide would need to agree on whether it is ethically right to pull a lever that diverts a runaway trolley away from five people who cannot move, knowing it will instead kill one person on its new path (the classic “trolley problem”).

[Chart: Work roles and AI]

Another dilemma raised by advances in AI is that once robots can adequately mimic human intelligence, awareness and emotions, we will need to decide whether they should also be granted human-equivalent rights, freedoms and protections.

With the future of AI unfolding rapidly and consumer fear evident, I share my insights into what human citizens can expect to see from robotics companies when it comes to ethics in AI in the near future:

- They will need to set clear ethical boundaries:

  As humans, alongside robotics developers, we must collectively determine ethical values that can be coded into and followed by robots. These values will need to cover the full range of foreseeable ethical problems and the correct way to respond in each situation. For example, driverless cars will need an ethics algorithm to decide, in an extreme scenario, whether a car carrying two children should swerve and kill two elderly pedestrians rather than kill the children in a head-on collision. Only then will we be able to design robots that can reason through ethical dilemmas the way humans would (complicating matters, of course, is that people across cultures don’t yet agree on these philosophical thought experiments either!).

- They will also have to factor in the unexpected:

  Even after we have set boundaries for ethical behaviour, there will often be several ethical ways to handle a situation, as well as unexpected moral dilemmas. For example, a robot delivering urgent medical supplies to a hospital might have to decide whether to stop and help an injured person it encounters along the way. To ensure robots follow a moral code as humans do, it would be wise to give AI a range of potential solutions to moral dilemmas and train it to evaluate them and make the best moral decision in any given situation.

- They will have to constantly monitor AI:

  As with any technology, programmers will need to constantly monitor and evaluate the ethics built into AI so that it stays up to date and makes the best decisions possible. Mistakes will inevitably be made, but programmers should do everything possible both to prevent them and to redevelop ethical codes so that AI is as morally sound as it can be.

How can you protect your staff and business when it comes to AI?

With AI being used across a multitude of industries, it’s only a matter of time before your staff and business are affected (if they aren’t already!).

But have you thought about what you’d do if an adverse situation resulted from the use of AI in your workplace? And do you have a plan for this kind of occurrence?

If not, it’s time to create one. It should include elements such as:

- Crisis communication plan:

  Using robots can streamline processes, save you time and revolutionise a business. However, accidents can happen, leaving a brand’s reputation hanging by a thread. Businesses using AI to perform roles alongside staff members must have a clear crisis communication plan in place to deal with issues swiftly and directly if and when they strike.

- Have procedures in place:

  Working alongside AI is relatively new for some. As such, clear procedures around roles and responsibilities are a must, and having processes to handle issues is prudent.

- Quality assurance:

  As with humans, errors and glitches can occur when AI is programmed to complete tasks. It’s important for all businesses to have quality assurance in place to prevent or address customer-facing issues, which can reflect badly on your brand if not handled correctly.

*Research was conducted and analysed by The Digital Edge Research Company for Thinque. The data is based on analysis of responses from over 1,000 Australians in January 2019. Respondents were aged 18 to 70+ and included a mix of male and female participants from across the country.

Featured Image credit: Engineers Journal